How does a DataLakeHouse Work?

Above all, a DataLakeHouse represents a concerted focus on achieving business value from your data. The definition of ‘value’ varies from organization to organization, based on the nature and goals of each business’s operation. Most organizations have a tremendous amount of untapped potential in their data. A DataLakeHouse is a platform that provides immediate guidance on how to begin unlocking that potential.

A DataLakeHouse applies first principles of separation of duties across the data pipeline stack, from ingest to egress, to achieve that business value: from infrastructure to governance, from self-service to production reporting, and from data collection and model training to machine learning in production. That flow of data has many inlets, outlets, and workflows, and some are naturally more pronounced than others. For example, a global sales team may rely heavily on its global CRM implementation and be less concerned with Human Resources (HR) data. But if the CRM implementation relies on pieces of HR data, such as the compensation plan or the percent-of-sale incentive, then that data needs to be captured, stored, organized, protected, and shared to help the CRM initiative achieve its goals.
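To make the CRM and HR example concrete, here is a minimal sketch of joining the two sources once both have landed in a shared store. The record shapes (`Deal`, `CompPlan`), the field names, and the sample values are all hypothetical and chosen only for illustration; they do not describe any actual DataLakeHouse schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deal:
    """Hypothetical CRM record for a closed sale."""
    deal_id: str
    rep_id: str
    amount: float


@dataclass(frozen=True)
class CompPlan:
    """Hypothetical HR record holding a rep's percent-of-sale incentive."""
    rep_id: str
    incentive_pct: float


def incentive_by_deal(deals, plans):
    """Join CRM deals to HR compensation plans on rep_id.

    Deals whose rep has no HR record are skipped rather than guessed at.
    """
    pct_by_rep = {plan.rep_id: plan.incentive_pct for plan in plans}
    payouts = {}
    for deal in deals:
        pct = pct_by_rep.get(deal.rep_id)
        if pct is None:
            continue
        payouts[deal.deal_id] = round(deal.amount * pct / 100, 2)
    return payouts


# Illustrative sample data only.
deals = [
    Deal("D-1", "rep-a", 10000.0),
    Deal("D-2", "rep-b", 2500.0),
    Deal("D-3", "rep-x", 400.0),  # no matching HR record
]
plans = [CompPlan("rep-a", 5.0), CompPlan("rep-b", 8.0)]

payouts = incentive_by_deal(deals, plans)
print(payouts)
```

The join only works if the HR data has been captured and shared under the same key the CRM uses, which is the point of the paragraph above: the sales team may never look at HR data directly, yet its reporting still depends on it.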

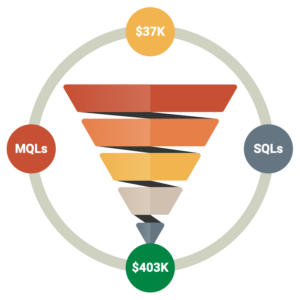

KPIs (Key Performance Indicators) still figure heavily inside a DataLakeHouse. As do newer,

Common problems a DataLakeHouse solves:

- Data integration and data pipeline development, where off-the-shelf software is recommended so that developers and analysts can focus on using the data instead of moving it from a source to a target work repository

- Data engineers, business analysts, and data scientists developing in notebooks and other tools on their local machines, with no shared record of where that work lives. It may not be checked in to source control, so the business side of the organization cannot leverage or gain visibility into the hours or days of work already done

- Business Value realization

- Path to Production

- Package, certify, and roll out in a controlled fashion, then determine business value and return on cost (is it worth the compute and person-hours?)

- Process Maturity Model (post deployment)

- Complete the circle: automate retraining, reproduce models, know when to retrain a model, and continue the lifecycle

- Trust in high-quality data coming into the system

- Reproducibility of the work

- Automation

- MLOps

- CI/CD

- Analytics
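The "complete the circle" item above raises the question of how to know when to retrain a model. One simple way to approach it, sketched below under stated assumptions, is to compare recent accuracy against the accuracy recorded at deployment. The function name `should_retrain`, the `tolerance` and `min_samples` defaults, and the sample numbers are hypothetical; this is an illustration of the idea, not a description of how DataLakeHouse.io implements it.

```python
from statistics import mean


def should_retrain(baseline_accuracy, recent_accuracies,
                   tolerance=0.05, min_samples=3):
    """Return True when average recent accuracy has dropped more than
    `tolerance` below the baseline measured at deployment.

    With fewer than `min_samples` recent measurements, return False so
    that a single noisy evaluation does not trigger a retrain.
    """
    if len(recent_accuracies) < min_samples:
        return False
    return baseline_accuracy - mean(recent_accuracies) > tolerance


# Illustrative values only.
stable = should_retrain(0.90, [0.89, 0.88, 0.90])
degraded = should_retrain(0.90, [0.82, 0.80, 0.81])
print(stable, degraded)
```

In an automated lifecycle, a check like this would typically run on a schedule and hand off to a retraining job when it returns `True`. Real deployments often track input data drift as well as accuracy, since ground-truth labels can arrive late.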



